This article explores the best alternatives to Requesty, highlighting platforms that provide greater flexibility, smarter pricing, and specialized features for integrating Large Language Models (LLMs). It compares options like Eden AI, Portkey, LiteLLM, OpenRouter, and Kong AI Gateway, showing how they deliver cost-effective, scalable, and future-proof solutions for developers and businesses looking to simplify AI adoption.

Large Language Models (LLMs) have become essential tools for developers, businesses, and researchers, powering applications like chatbots, content creation, and customer support. Popular LLMs include OpenAI’s GPT-5, Anthropic’s Claude, Meta’s LLaMA, and Mistral’s Mixtral.

To integrate these models, developers typically access them via an API, but managing multiple accounts, billing, and API keys can be cumbersome. Model aggregators solve this by offering a single API to switch between different LLMs easily.
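The single-API idea can be sketched in a few lines. This is a minimal illustration only: the `Aggregator` class, the model names, and the two stub providers are hypothetical and do not reflect the API of Requesty or any other platform mentioned here. A real adapter would call the vendor's HTTP API with its own key.

```python
from typing import Callable, Dict

# Hypothetical stub providers; each takes a prompt and returns text.
def fake_provider_a(prompt: str) -> str:
    return f"[provider-a] {prompt}"


def fake_provider_b(prompt: str) -> str:
    return f"[provider-b] {prompt}"


class Aggregator:
    """One entry point that dispatches a request to a registered provider."""

    def __init__(self) -> None:
        self._providers: Dict[str, Callable[[str], str]] = {}

    def register(self, model: str, handler: Callable[[str], str]) -> None:
        self._providers[model] = handler

    def complete(self, model: str, prompt: str) -> str:
        if model not in self._providers:
            raise KeyError(f"unknown model: {model}")
        return self._providers[model](prompt)


aggregator = Aggregator()
aggregator.register("model-a", fake_provider_a)
aggregator.register("model-b", fake_provider_b)

# Switching models is a one-argument change for the caller.
print(aggregator.complete("model-a", "Hello"))
print(aggregator.complete("model-b", "Hello"))
```

The application only ever talks to `complete`, so keys, billing, and provider-specific request formats stay behind the aggregator.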

Requesty is one of these solutions, providing access to multiple AI providers through a unified API. It simplifies integration by routing requests to different models from a single endpoint. However, Requesty isn’t the only option.

Several alternatives today offer better pricing, more flexibility, or specialized enterprise features. Among these, Eden AI stands out as the best Requesty alternative, delivering cost-effective AI access, enterprise-grade scalability, and support for both proprietary and open-source models.

Eden AI is more than just a model aggregator. It’s a complete AI platform that lets developers access dozens of providers and services through a single API. In addition to leading LLMs such as GPT-5, Claude, LLaMA, and Mixtral, it covers other AI tasks, including translation, chat, image generation, and text-to-speech. This makes it a strong choice for teams that want to combine different AI capabilities without having to manage multiple accounts and integrations.

What sets Eden AI apart is its cost-optimization engine. Every request can be routed to the most efficient and affordable provider, which helps reduce expenses while maintaining high-quality outputs. Reliability is built in as well: fallback providers take over when a request fails, caching avoids paying twice for identical requests, and usage monitoring keeps spending visible.
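As a rough illustration of cost-aware routing with fallback, the sketch below tries providers from cheapest to most expensive and moves on when one fails. It is not Eden AI's actual engine: the `Provider` type, the prices, and the stub functions are assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Provider:
    name: str
    cost_per_1k_tokens: float  # illustrative price, not a real quote
    call: Callable[[str], str]


def route_cheapest_first(providers: List[Provider], prompt: str) -> Tuple[str, str]:
    """Try providers in ascending price order; fall back on any failure."""
    errors = []
    for provider in sorted(providers, key=lambda p: p.cost_per_1k_tokens):
        try:
            return provider.name, provider.call(prompt)
        except Exception as exc:  # a real router would catch narrower errors
            errors.append(f"{provider.name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


def flaky(prompt: str) -> str:
    raise TimeoutError("simulated outage")


def stable(prompt: str) -> str:
    return f"answer to: {prompt}"


providers = [
    Provider("premium", 3.00, stable),
    Provider("budget", 0.50, flaky),
    Provider("standard", 1.00, stable),
]

# "budget" is tried first and fails, so the request falls back to "standard".
print(route_cheapest_first(providers, "Summarize this ticket"))
```

Price is the only ranking signal here. A production router would typically also weigh latency, output quality, and rate limits.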

Unlike Requesty, Eden AI is designed with flexibility in mind. There’s no vendor lock-in, meaning developers can easily experiment with new models as soon as they’re released. Whether your priority is price, performance, or access to the latest innovations, Eden AI makes switching between providers seamless, positioning it as the most adaptable and future-proof alternative to Requesty.

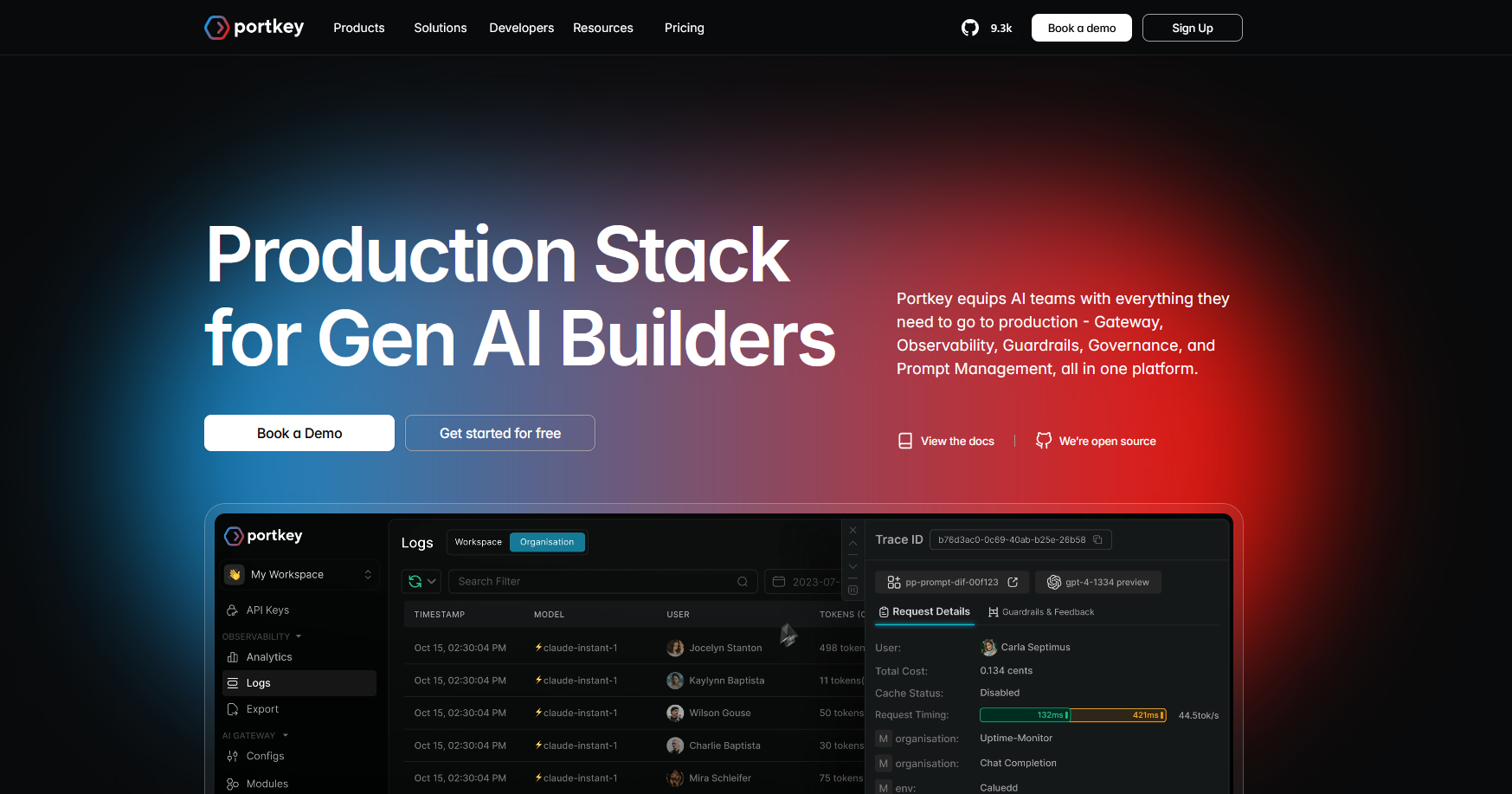

Portkey is another strong alternative to Requesty, designed to help developers manage and optimize LLM usage at scale. It supports integration with multiple AI providers and offers advanced features such as smart routing, caching, and rate limiting. One of its standout advantages is its ability to automatically route requests to the most suitable model, balancing cost, speed, and reliability.

Beyond routing, Portkey also includes cost-optimization features, such as caching repeated queries, and monitoring tools that give developers clear insights into usage and performance. This makes it easier to identify bottlenecks, control spending, and make data-driven decisions.
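Caching repeated queries is simple to picture. The sketch below is a generic exact-match cache, not Portkey's implementation: `CachedClient` and the stub backend are hypothetical names, and the cache is an in-memory dictionary with no expiry.

```python
import hashlib
from typing import Callable, Dict


class CachedClient:
    """Wraps a completion backend and reuses answers for identical requests."""

    def __init__(self, backend: Callable[[str, str], str]) -> None:
        self._backend = backend
        self._cache: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # Hash model and prompt together so the same prompt sent to
        # different models is cached separately.
        return hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()

    def complete(self, model: str, prompt: str) -> str:
        key = self._key(model, prompt)
        if key in self._cache:
            self.hits += 1
            return self._cache[key]
        self.misses += 1
        result = self._backend(model, prompt)
        self._cache[key] = result
        return result


def stub_backend(model: str, prompt: str) -> str:
    # Stand-in for a billable API call.
    return f"{model} says: {prompt.upper()}"


client = CachedClient(stub_backend)
client.complete("model-a", "hello")
client.complete("model-a", "hello")  # served from cache, no second backend call
print(client.hits, client.misses)
```

The hit and miss counters double as a basic usage metric, which is the kind of signal monitoring dashboards build on.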

With its developer-friendly API, integration is straightforward, allowing teams to get started quickly. Portkey also provides tiered pricing, including a free tier with limited usage for experimentation and premium plans for higher-volume needs. For businesses seeking a cost-effective and optimized alternative to Requesty, Portkey is a solid choice.

LiteLLM is an open-source alternative to Requesty, built for developers who want maximum control and transparency over their AI integrations. Instead of relying on a managed service, LiteLLM lets you self-host and manage your own API requests, giving you the freedom to choose your infrastructure and avoid vendor lock-in.

One of LiteLLM’s biggest strengths is its flexibility. Businesses can deploy it on their preferred cloud provider, configure custom routing rules, and maintain full control over data privacy and security. This makes it an attractive option for teams with strict compliance requirements or those who want to customize their AI stack without being tied to a third-party platform like Requesty.
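To make "custom routing rules" concrete, here is a small rule-based selector of the kind a self-hosted gateway could apply. It is a generic sketch and does not use LiteLLM's configuration format or API: the `Rule` type, the request fields, and the deployment names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Rule:
    description: str
    matches: Callable[[dict], bool]
    target: str  # hypothetical deployment name


def pick_target(rules: List[Rule], request: dict, default: str) -> str:
    """Return the target of the first matching rule, else the default."""
    for rule in rules:
        if rule.matches(request):
            return rule.target
    return default


rules = [
    # Keep sensitive data on infrastructure the team controls.
    Rule("PII stays in-house", lambda r: r.get("contains_pii", False), "self-hosted-model"),
    # Send long prompts to a deployment with a larger context window.
    Rule("long prompts", lambda r: len(r.get("prompt", "")) > 2000, "long-context-model"),
]

request = {"prompt": "Customer record for review", "contains_pii": True}
print(pick_target(rules, request, default="general-model"))
```

Rules are evaluated in order, so the privacy rule takes priority over the length rule when both apply.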

LiteLLM is free and open-source, available directly on GitHub, and backed by an active developer community. However, it does require more technical expertise to set up and manage compared to managed platforms. For teams willing to invest in that setup effort, LiteLLM offers a highly customizable and transparent alternative to Requesty.

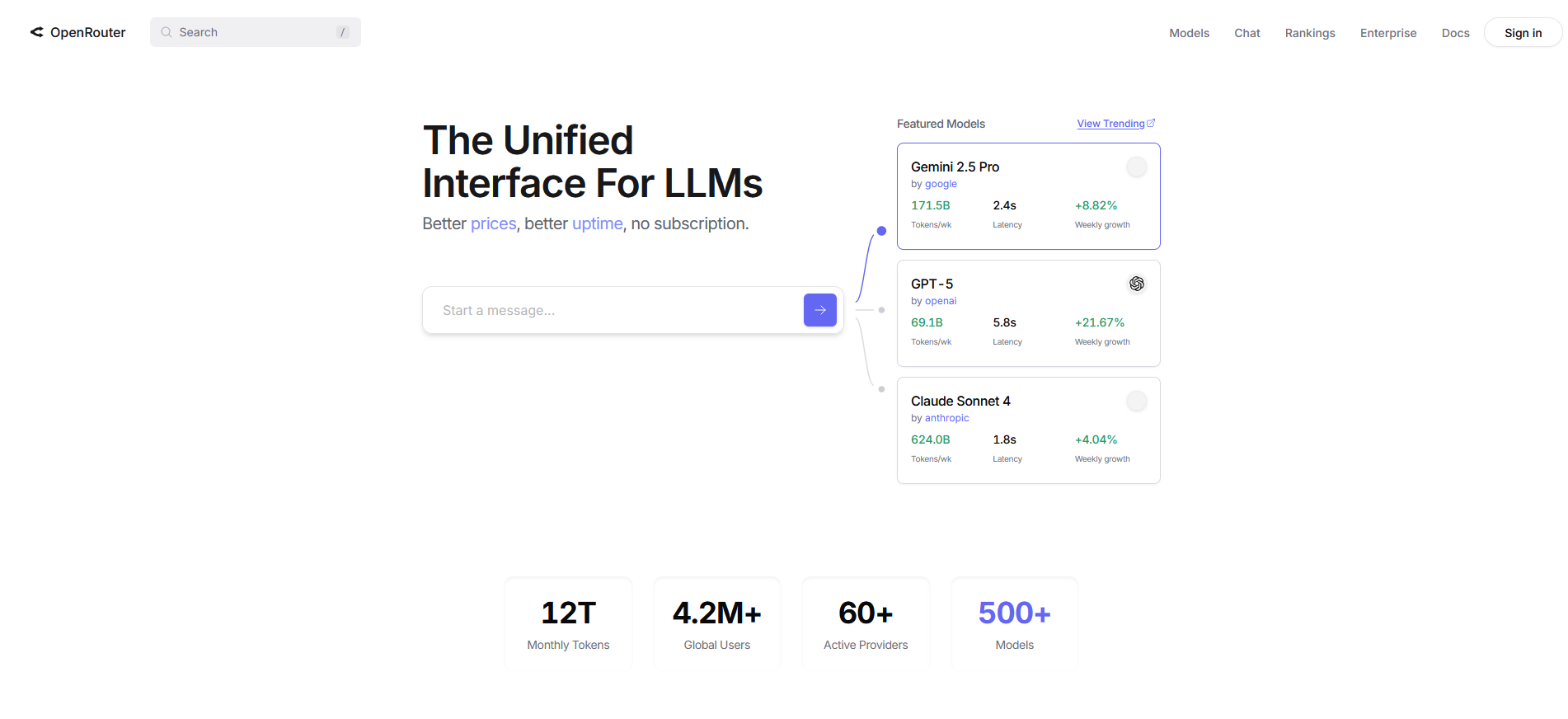

OpenRouter is one of the most widely used LLM aggregators, giving developers access to multiple providers through a single API. It supports models like GPT-5, Claude, LLaMA, and Mixtral, and makes it simple to route requests across them without managing separate accounts or keys.

Its strengths are ease of integration and a large community of developers already building on it. However, OpenRouter mainly focuses on text models and doesn’t offer the broader multi-modal services (like OCR, speech-to-text, or translation) that some alternatives provide.

For teams whose primary goal is quick, reliable access to top LLMs, OpenRouter remains a solid alternative to Requesty.

Kong AI Gateway is an open-source extension of Kong Gateway that makes it easier to integrate and manage multiple LLMs through a single API. It supports major providers such as OpenAI, Anthropic, Cohere, Mistral, and Meta’s LLaMA, allowing developers to switch between models without changing their application code.

Some of its key features include centralized credential management, real-time metrics, no-code AI integrations, and advanced prompt engineering. Together, these capabilities help organizations streamline AI adoption while maintaining strong security, governance, and compliance controls.

As a free, self-hosted alternative to Requesty, Kong AI Gateway offers scalability and full customization for teams that want to run their own infrastructure. However, unlike managed services such as Requesty, it requires more setup and ongoing maintenance, which may not be ideal for companies looking for a plug-and-play solution. For teams that prioritize control, privacy, and compliance, Kong AI Gateway is a compelling option.

Requesty is a popular option for accessing multiple LLMs through a single API, but several strong alternatives offer better pricing, more flexibility, or a broader feature set. The right choice depends on your priorities, whether that’s affordability, enterprise-grade governance, scalability, or open-source access.

Among them, Eden AI stands out as the best alternative. With its unified API, cost-optimized routing, multi-modal AI support, and no vendor lock-in, Eden AI makes it easy for businesses and developers to access a wide range of AI services without juggling multiple providers. By choosing Eden AI, you gain access to the latest models like GPT-5 alongside open-source options, while keeping costs under control and ensuring long-term flexibility.

Whether you’re building chatbots, research tools, AI-powered assistants, or automated workflows, Eden AI ensures access to the best-performing and most cost-efficient models, all without the hassle of managing separate integrations.

You can start building right away. If you have any questions, feel free to chat with us!
